Disinformation in the Digital Age: A Historical Perspective and Contemporary Solutions

- by Diana Krasnova and Maria Grinavica

- Dec 3, 2024

Updated: May 19, 2025

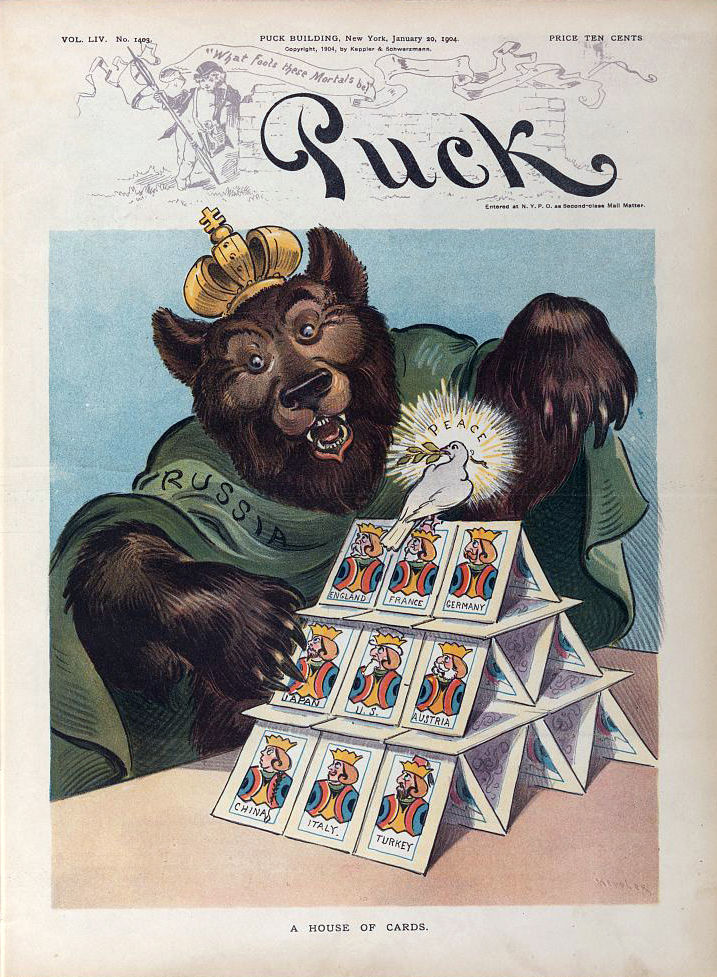

Disinformation – the deliberate dissemination of false or manipulated information to mislead – has existed as long as human communication. In ancient Greece, during the Peloponnesian War, strategic false narratives were used to mislead and demoralize opponents. These early examples, documented by Thucydides, the ancient Greek historian renowned for his critical and empirical approach, highlight the enduring role of disinformation in shaping public narratives and manipulating perception. The invention of the printing press in the 15th century revolutionized information dissemination but also enabled false information to spread at unprecedented scale. Governments and religious authorities controlled printed materials, often shaping public opinion to align with their agendas. By the late 19th century, “yellow journalism” had emerged – a term for sensationalized and often exaggerated news reporting aimed at driving readership. It prioritized attention-grabbing headlines over accuracy, frequently distorting facts to evoke strong emotional reactions. This era of journalism underscores the enduring tension between commercial interests and the integrity of information.

In the modern era, disinformation has been magnified by digital technologies. Social media platforms, algorithms, and artificial intelligence (AI) now enable the creation and dissemination of false narratives at unparalleled speed and precision, raising concerns about their profound societal impacts.

What Are the Dangers of Disinformation?

Disinformation campaigns exploit societal divides, amplify tensions, and erode trust in institutions. Politically motivated campaigns featured prominently in events such as the 2016 U.S. presidential election and the Brexit referendum. In these cases, coordinated efforts exploited social media algorithms to polarize societies and influence outcomes. During the 2016 U.S. election, Russian-backed organizations, such as the Internet Research Agency, used fake social media accounts to spread divisive content, targeting specific demographics with tailored disinformation to sow discord. The Cambridge Analytica scandal, on the other hand, revealed how data from millions of Facebook users was harvested to create psychographic profiles, enabling highly targeted and manipulative political advertisements during both the U.S. election and the Brexit referendum.

These campaigns often tap into societal fears and prejudices, creating narratives that appear credible but are deeply misleading. Social media platforms exacerbate these issues through echo chambers and algorithm-driven content, reinforcing preexisting beliefs and deepening ideological divides. Emotional triggers like fear and anger are weaponized, disrupting social cohesion and fostering radicalization. Historical grievances are similarly exploited to deepen discontent among communities.

One of the most insidious effects of disinformation is its erosion of trust in credible information sources. Traditional media, experts, and institutions have become targets of delegitimizing campaigns. Algorithms prioritizing engagement over accuracy further amplify false narratives, undermining public discourse and factual reporting. The societal impact is multifaceted, challenging democratic institutions, disrupting public health initiatives (as seen during the COVID-19 pandemic), destabilizing economies, and hindering scientific progress. The rise of AI and deepfake technologies has only exacerbated these dangers, creating highly convincing yet fabricated content that complicates the verification of authenticity.

The Role of AI and Measures to Counter Disinformation

AI and deepfake technologies have transformed the disinformation landscape, elevating it to new levels of sophistication. Deepfakes use deep learning to create highly convincing yet fabricated audiovisual content, posing significant challenges for verifying authenticity. These technologies erode confidence in evidence-based communication and make it increasingly difficult to distinguish truth from fabrication. AI also enables the mass production of false narratives with unprecedented precision. Algorithmically tailored misinformation can target specific audiences effectively, exploiting their vulnerabilities and reinforcing biases. The rapid evolution of these technologies outpaces regulatory responses, making them a formidable tool for malicious actors seeking to manipulate public perception.

Addressing disinformation requires a multifaceted and multidisciplinary approach, with legal regulation playing a crucial role. Governments, technology companies, civil society, and international organizations must work collaboratively to create adaptive and enforceable frameworks. The European Union (EU) has been at the forefront of combating disinformation. The Digital Services Act (DSA), adopted in 2022, mandates transparency in algorithms, accountability for content moderation decisions, and cooperation with fact-checking organizations. Complementing this, the Strengthened Code of Practice on Disinformation introduces robust measures, including enhanced content moderation, support for independent fact-checking, and increased data sharing with researchers.

Globally, the United Nations emphasizes international cooperation. The UN Secretary-General’s 2022 report on disinformation underscores the importance of media pluralism, transparency in content moderation, and engaging civil society in policy development. Capacity-building initiatives, such as media literacy programs, are essential for empowering individuals to critically evaluate information. Organizations like NATO have integrated disinformation countermeasures into strategic defense frameworks, focusing on resilience against hybrid threats and fostering collaboration among member states. Similarly, the Council of Europe’s Budapest Convention on Cybercrime provides mechanisms for international cooperation, addressing the transnational nature of disinformation campaigns.

Despite these efforts, significant challenges remain. The transnational nature of disinformation complicates enforcement, as campaigns often originate from jurisdictions with weaker regulatory frameworks. Rapid technological advancements, particularly in AI, outpace legislative responses, leaving regulatory gaps. Addressing these challenges requires harmonizing international standards and enhancing cross-border collaboration, as emphasized by organizations like the OECD and the International Telecommunication Union. Strengthened legislation, coupled with global cooperation, is essential to mitigate the risks posed by disinformation and ensure the integrity of digital information ecosystems.

Disinformation is a persistent and evolving threat with deep historical roots and far-reaching consequences. Its ability to exploit social divides, undermine trust, and manipulate public opinion highlights the urgency of comprehensive strategies to counter its spread. Through regulatory frameworks, technological innovation, public education, and international collaboration, societies can foster resilience and safeguard democratic principles in the face of this complex phenomenon.

Diana Krasnova has a background in Law and Economics and a passion for writing, specializing in the Middle East, Latin America, and Sub-Saharan Africa. She actively participates in sustainable regional development and human rights projects for the United Nations and other organizations. As the founder of a think-tank NGO, she is proudly leading the first research team in Latvia on ecocide.

Maria Grinavica holds an MBA and is pursuing a Master’s degree in International Cooperation, Finance, and Development with expertise in digital marketing, content creation, graphic design, and corporate communications. She specializes in sustainable development, education, and entrepreneurship, actively contributing to research and managing EU-funded projects.
